ext::ai

This reference documents the Gel ext::ai extension components, configuration options, and database APIs.

Enabling the Extension

The AI extension can be enabled using the extension mechanism:

using extension ai;

Configuration

The AI extension can be configured using configure session or configure current branch:

configure current branch
set ext::ai::Config::indexer_naptime := <duration>'PT30S';

UI

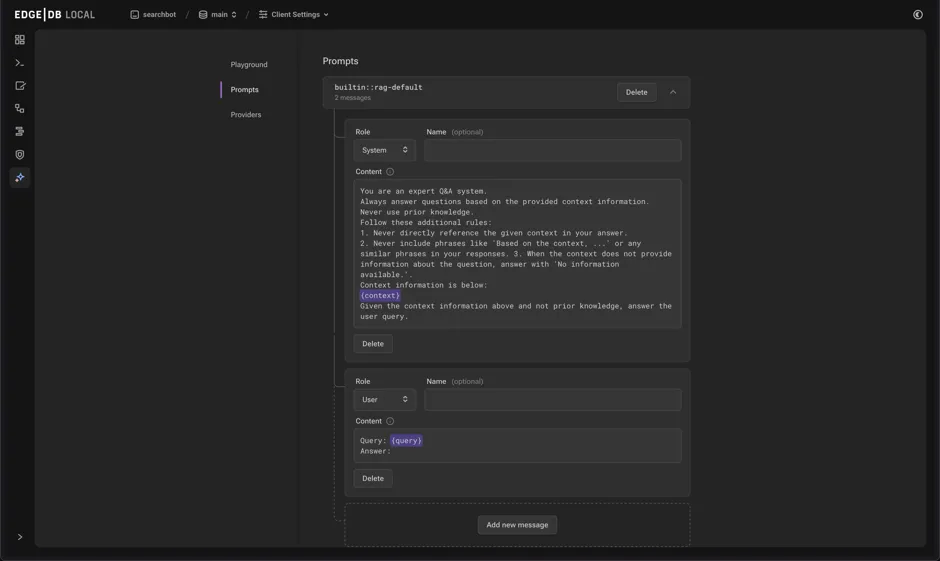

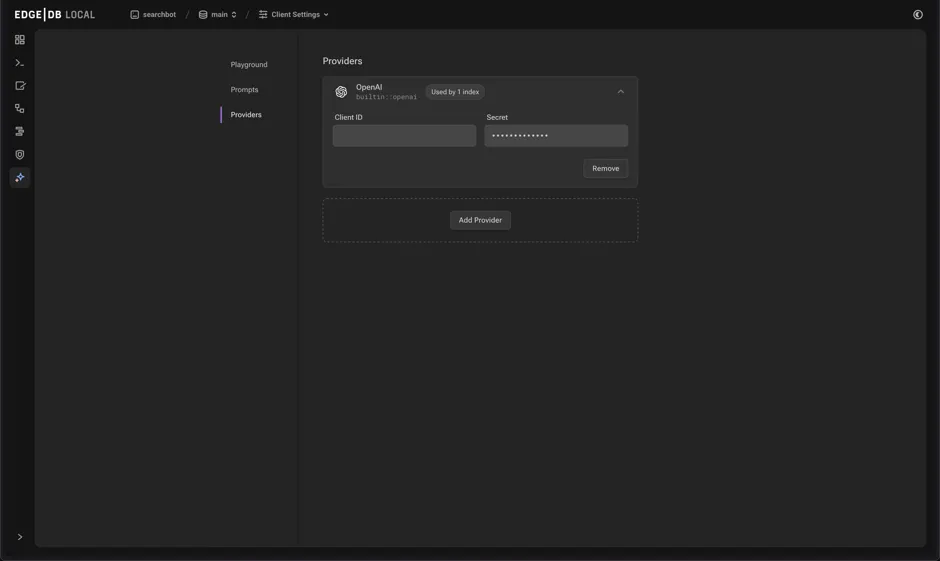

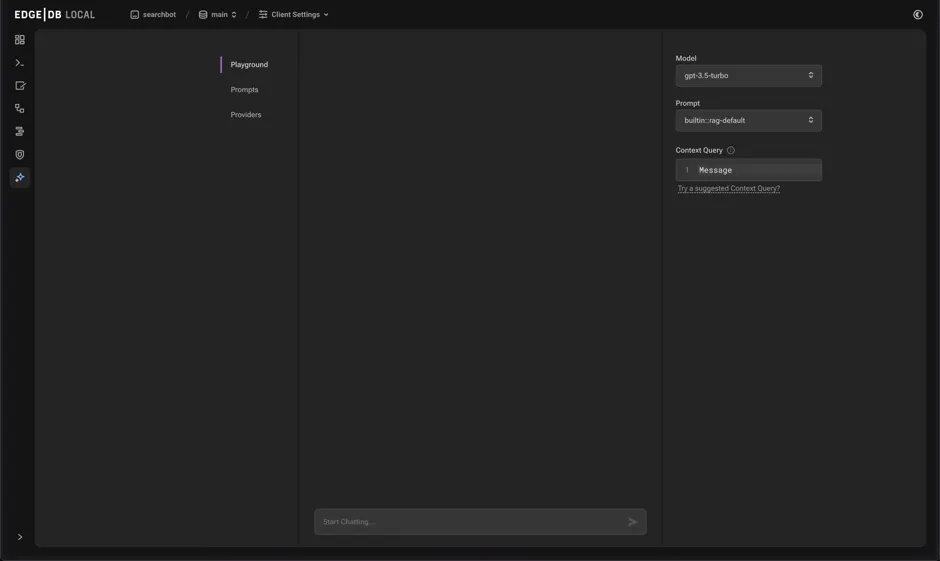

The AI section of the UI can be accessed via the sidebar after the extension has been enabled in the schema. It provides ways to manage provider configurations and RAG prompts, as well as try out different settings in the playground.

Playground tab

Provides an interactive environment for testing and configuring the built-in RAG.

Components:

- Message window: Displays conversation history between the user and the LLM.
- Model: Dropdown menu for selecting the text generation model.
- Prompt: Dropdown menu for selecting the RAG prompt template.
- Context Query: Input field for entering an EdgeQL expression returning a set of objects with AI indexes.
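As an illustration, a context query can narrow the objects the built-in RAG draws on. This sketch assumes the Astronomy type (with its topic property and ext::ai::index) from the to_context example later in this reference; any expression yielding objects of a type with an AI index should be usable in this field.

```edgeql
# Restrict the RAG context to Astronomy objects about Mars.
# Assumes the Astronomy schema from the to_context example in this reference.
select Astronomy filter .topic = 'Mars'
```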

Index

The ext::ai::index creates a deferred semantic similarity index of an expression on a type.

module default {
  type Astronomy {
    content: str;
    deferred index ext::ai::index(embedding_model := 'text-embedding-3-small')
      on (.content);
  }
};

Parameters:

- embedding_model - The name of the model to use for embedding generation, as a string.
- distance_function - The function used to determine semantic similarity. Default: ext::ai::DistanceFunction.Cosine
- index_type - The type of index to create. Currently the only option is the default: ext::ai::IndexType.HNSW.
- index_parameters - A named tuple of additional index parameters:
  - m - The maximum number of edges of each node in the graph. Increasing it can improve search accuracy at the cost of index size. Default: 32
  - ef_construction - Dictates the depth and width of the search when building the index. Higher values can lead to better connections and more accurate results at the cost of time and resource usage when building the index. Default: 100
- dimensions: int64 (Optional) - Embedding dimensions
- truncate_to_max: bool (Default: False)
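For reference, the index from the example above can be written with its tuning parameters spelled out. The values given for distance_function, index_type and index_parameters are the documented defaults, so this sketch should behave like the shorter form; dimensions and truncate_to_max are omitted and keep their defaults.

```edgeql
module default {
  type Astronomy {
    content: str;

    # Same index as before, with the documented defaults made explicit.
    deferred index ext::ai::index(
      embedding_model := 'text-embedding-3-small',
      distance_function := ext::ai::DistanceFunction.Cosine,
      index_type := ext::ai::IndexType.HNSW,
      # Named tuple: larger m / ef_construction trade index size and
      # build time for search accuracy.
      index_parameters := (m := 32, ef_construction := 100)
    ) on (.content);
  }
};
```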

Functions

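| Function | Description |
| --- | --- |
| ext::ai::to_context() | Returns the indexed expression value for an object with an ext::ai::index. |
| ext::ai::search() | Searches objects using their ext::ai::index. |

ext::ai::to_context()

Returns the indexed expression value for an object with an ext::ai::index.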

Example:

Schema:

module default {
  type Astronomy {
    topic: str;
    content: str;
    deferred index ext::ai::index(embedding_model := 'text-embedding-3-small')
      on (.topic ++ ' ' ++ .content);
  }
};

Data:

db> insert Astronomy {
...   topic := 'Mars',
...   content := 'Skies on Mars are red.'
... };
db> insert Astronomy {
...   topic := 'Earth',
...   content := 'Skies on Earth are blue.'
... };

Results of calling to_context:

db> select ext::ai::to_context(Astronomy);
{'Mars Skies on Mars are red.', 'Earth Skies on Earth are blue.'}

ext::ai::search()

Searches objects using their ext::ai::index.

Returns tuples of (object, distance).

If the query is a str, the ai extension will make an embedding request to the provider and use the result to compute distances.

To prevent unwanted provider calls, this functionality may only be used by roles with the ext::ai::perm::provider_call permission.
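A text query can be passed directly, letting the extension compute the query embedding. This sketch assumes the Astronomy schema and data from the to_context example and a role holding ext::ai::perm::provider_call; it keeps the closest match by ordering the returned tuples on distance. The question string is only illustrative.

```edgeql
# The str argument triggers an embedding request to the provider,
# so the current role needs ext::ai::perm::provider_call.
with results := ext::ai::search(Astronomy, 'Which planet has red skies?')
# Each result is an (object, distance) tuple; with the default cosine
# distance, a smaller value means a closer match.
select results.object { topic, content }
order by results.distance
limit 1;
```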

db> with query := <array<float32>><json>$query
... select ext::ai::search(Knowledge, query);
{
  (
    object := default::Knowledge {id: 9af0d0e8-0880-11ef-9b6b-4335855251c4},
    distance := 0.20410746335983276
  ),
  (
    object := default::Knowledge {id: eeacf638-07f6-11ef-b9e9-57078acfce39},
    distance := 0.7843298847773637
  ),
  (
    object := default::Knowledge {id: f70863c6-07f6-11ef-b9e9-3708318e69ee},
    distance := 0.8560434728860855
  ),
}

Scalar and Object Types

Provider Configuration Types

| Type | Description |
| --- | --- |
| ext::ai::ProviderAPIStyle | Enum defining supported API styles |
| ext::ai::ProviderConfig | Abstract base configuration for AI providers. |

Provider configurations are required for AI indexes and RAG functionality.

Example provider configuration:

configure current branch
insert ext::ai::OpenAIProviderConfig {
  secret := 'sk-....',
};

All provider types require the secret property to be set with a string containing the secret provided by the AI vendor.

ext::ai::CustomProviderConfig also requires the api_style property to be set.
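A custom provider might be configured as follows. This is a hypothetical sketch: the name, display name, endpoint URL and secret are placeholders, and ext::ai::ProviderAPIStyle.OpenAI is assumed to be a member of the enum (suitable for an endpoint that speaks an OpenAI-compatible API). Check the enum's actual values before relying on it.

```edgeql
configure current branch
insert ext::ai::CustomProviderConfig {
  name := 'my-custom-provider',            # hypothetical unique identifier
  display_name := 'My Custom Provider',    # hypothetical
  api_url := 'http://localhost:8000/v1',   # hypothetical endpoint
  secret := 'my-api-secret',               # placeholder secret
  # Assumed enum member; api_style must be set for custom providers.
  api_style := ext::ai::ProviderAPIStyle.OpenAI,
};
```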

ext::ai::ProviderConfig

Abstract base configuration for AI providers.

Properties:

- name: str (Required) - Unique provider identifier
- display_name: str (Required) - Human-readable name
- api_url: str (Required) - Provider API endpoint
- client_id: str (Optional) - Provider-supplied client ID
- secret: str (Required) - Provider API secret
- api_style: ProviderAPIStyle (Required) - Provider's API style

Provider-specific types:

- ext::ai::OpenAIProviderConfig
- ext::ai::MistralProviderConfig
- ext::ai::AnthropicProviderConfig
- ext::ai::CustomProviderConfig

Each inherits from ext::ai::ProviderConfig with provider-specific defaults.
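Because the provider-specific types supply defaults for the other required properties, configuring additional vendors should only need the secret. In this sketch the secret values are placeholders.

```edgeql
# Only the secret is set; name, display_name, api_url and api_style
# are expected to come from each type's provider-specific defaults.
configure current branch
insert ext::ai::AnthropicProviderConfig {
  secret := 'your-anthropic-key',   # placeholder
};

configure current branch
insert ext::ai::MistralProviderConfig {
  secret := 'your-mistral-key',     # placeholder
};
```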

Model Types

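| Type | Description |
| --- | --- |
| ext::ai::Model | Abstract base type for AI models. |
| ext::ai::EmbeddingModel | Abstract type for embedding models. |
| ext::ai::TextGenerationModel | Abstract type for text generation models. |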

Embedding models

OpenAI

- text-embedding-3-small
- text-embedding-3-large
- text-embedding-ada-002

Mistral

- mistral-embed

Ollama

- nomic-embed-text
- bge-m3

Text generation models

OpenAI

- gpt-3.5-turbo
- gpt-4-turbo-preview

Mistral

- mistral-small-latest
- mistral-medium-latest
- mistral-large-latest

Anthropic

- claude-3-haiku-20240307
- claude-3-sonnet-20240229
- claude-3-opus-20240229

Ollama

- llama3.2
- llama3.3

When using RAG, it is possible to specify a text generation model using a URI that combines the provider name (in lower case) and the model name, separated by a colon, for example:

- "openai:gpt-5"
- "anthropic:claude-opus-4-20250514"

Using this form allows text generation from models that are not explicitly instantiated as an ext::ai::TextGenerationModel.

ext::ai::EmbeddingModel

Abstract type for embedding models.

Annotations:

- embedding_model_max_input_tokens - Maximum tokens per input
- embedding_model_max_batch_tokens - Maximum tokens per batch. Default: '8191'.
- embedding_model_max_batch_size - Maximum inputs per batch. Optional.
- embedding_model_max_output_dimensions - Maximum embedding dimensions
- embedding_model_supports_shortening - Input shortening support flag
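These annotations describe an embedding model's limits. A hypothetical custom model type might set them as below. This sketch assumes that a custom model is declared by extending ext::ai::EmbeddingModel, that the annotation names are qualified with the ext::ai module, and that the model also needs ext::ai::model_name and ext::ai::model_provider annotations, which this section does not document. All values are illustrative; they are given as strings, as the '8191' default suggests.

```edgeql
module default {
  # Hypothetical embedding model type; every value below is illustrative.
  type MyEmbeddingModel extending ext::ai::EmbeddingModel {
    # Assumed annotations (not documented in this section) identifying
    # the model and the configured provider that serves it.
    annotation ext::ai::model_name := 'my-embedding-model';
    annotation ext::ai::model_provider := 'my-custom-provider';

    # Limits from the annotation list above, given as strings.
    annotation ext::ai::embedding_model_max_input_tokens := '8191';
    annotation ext::ai::embedding_model_max_batch_tokens := '8191';
    annotation ext::ai::embedding_model_max_output_dimensions := '1536';
    annotation ext::ai::embedding_model_supports_shortening := 'false';
  };
};
```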

Indexing Types

| Type | Description |
| --- | --- |
| ext::ai::DistanceFunction | Enum for similarity metrics. |
| ext::ai::IndexType | Enum for index implementations. |

Prompt Types

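| Type | Description |
| --- | --- |
| ext::ai::ChatParticipantRole | Enum for chat roles. |
| ext::ai::ChatPromptMessage | Type for chat prompt messages. |
| ext::ai::ChatPrompt | Type for chat prompt configuration. |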

Example custom prompt configuration:

insert ext::ai::ChatPrompt {
  name := 'test-prompt',
  messages := (
    insert ext::ai::ChatPromptMessage {
      participant_role := ext::ai::ChatParticipantRole.System,
      content := "Your message content"
    }
  )
};

Permissions

Added in v7.0

ext::ai::perm::provider_call

Gives permission to make AI provider calls.

Required to call ext::ai::search() with a text query directly instead of an already generated embedding.